Key insights from

Weapons of Math Destruction: How Big Data Increases Inequality and Threatens Democracy

By Cathy O'Neil

What you’ll learn

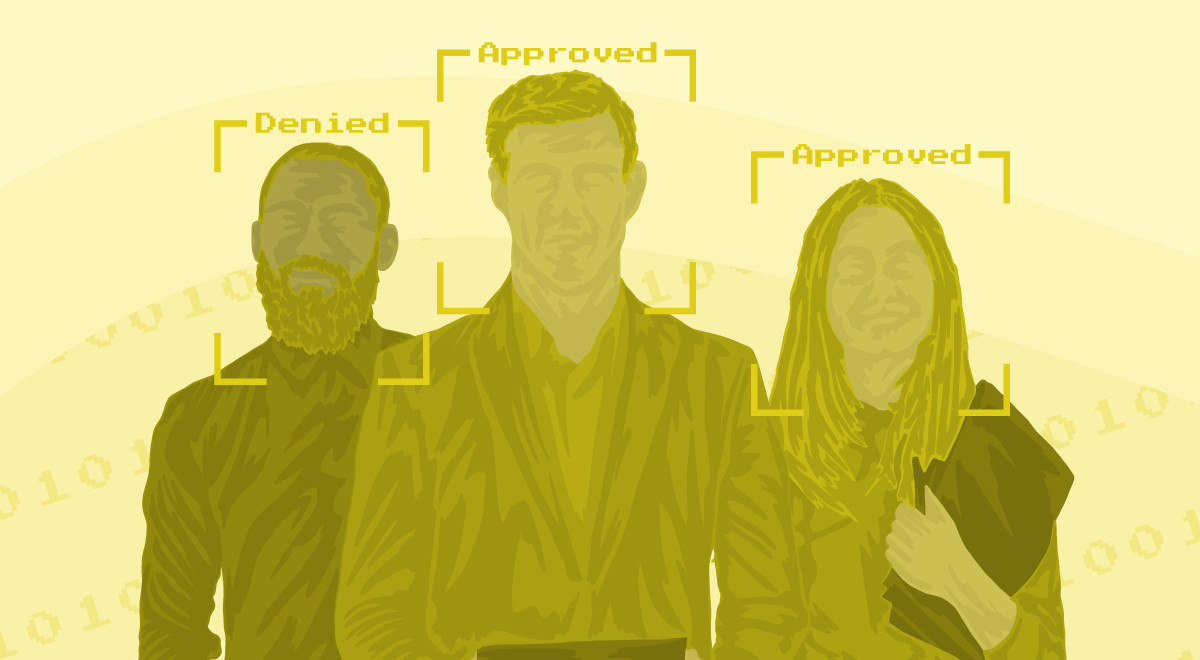

Algorithms aren’t always seamless strings of code that translate reality into perfect equations. Rather, algorithms are human creations subject to the same mistakes their users often fall prey to, presenting missteps as insights and using the currency of fact to validate flaws. As the financial world, higher education, and the political realm bend to the numerical whims of algorithms, society drifts further from the ideal it wishes to create and imperils those it strives to help. Mathematician Cathy O’Neil exposes the deceptive algorithms our culture has grown dangerously reliant upon, cautioning us to do the math for ourselves and encouraging everyone to think more critically about the processes that are turning society into an imbalanced equation.

Read on for key insights from Weapons of Math Destruction.

1. Destructive algorithms are hard to recognize and difficult to avoid.

You don’t need a degree in calculus or advanced computer engineering to understand what an algorithm is. In fact, mathematicians and laypeople alike use these kinds of “models” every day, drawing on information from the past to improve future outcomes. There’s nothing wrong with well-functioning algorithms: they expedite highly intricate and time-consuming processes to make life easier. But many algorithms aren’t helpful, and the ones now flooding society with entrancing equations are far from harmless. As the author writes, these “models are opinions embedded in mathematics.” Tethering unavoidable human fallibility to equations doesn’t remedy its sting; it simply hides the faults behind an unwavering mask of numbers.

The typical algorithm is a model composed of pertinent data that helps lead to a designated end. Whether or not this end is satisfactory alters the content of the algorithm thereafter. For instance, when deciding on what to make for dinner, the author considers her ultimate aim: pleasing her family and making sure her kids get the right amount of nourishing foods. With that in mind, she factors variables like dietary needs, likes, dislikes, and her own hectic schedule into her algorithm. Based on this data in accordance with her preference to cook an easy and healthy dinner for her family, she makes a particular meal. If her kids don’t finish their peas or simply swish their potatoes around their plate, the author registers that outcome and tweaks a few things in her algorithm for tomorrow night’s meal. These kinds of “dynamic models” are reasonable and correctable, and this one even answers that frustrating little question of “what’s for dinner?”
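For readers who think in code, the dinner example can be sketched as a tiny feedback loop. This is only an illustration under invented assumptions: the meal names, starting scores, the `learning_rate` parameter, and both function names are hypothetical and do not come from the book.

```python
# Hypothetical illustration of a "dynamic model": every name and number is invented.

def choose_meal(scores):
    """Pick the meal with the highest current score."""
    return max(scores, key=scores.get)


def update_preferences(scores, meal, fraction_eaten, learning_rate=0.5):
    """Nudge one meal's score toward the fraction of it that was actually eaten."""
    updated = dict(scores)
    updated[meal] += learning_rate * (fraction_eaten - updated[meal])
    return updated


# Starting beliefs about how well each meal goes over (0 = ignored, 1 = plates cleaned).
scores = {"peas and potatoes": 0.8, "pasta": 0.6, "stir fry": 0.5}

tonight = choose_meal(scores)

# Feedback: the kids ate only about a fifth of tonight's dinner.
scores = update_preferences(scores, tonight, fraction_eaten=0.2)

tomorrow = choose_meal(scores)
print(tonight, "->", tomorrow)
```

The point of the sketch is the update step: the model observes a real outcome and corrects itself, which is exactly what the harmful models described next fail to do.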

The more threatening algorithms, on the other hand, use similar processes but sweep much more ambiguous data into their calculations and negatively impact human lives (in ways that are much more devastating than simply eating a mediocre meal). The author calls these corrupted algorithms “weapons of math destruction,” and they unintentionally pull human preference, oversight, and error into their models to spew out falsely neutral statements about reality that then lead to misguided action. With goals that are difficult to quantify, such algorithms employ “proxies” or approximations of data, making their systems vague and incorrect, fueled by “feedback loops” that fail to detect when the system’s gone awry. In other words, these models handle much trickier issues than the question of what to eat for dinner, but they bypass truth with easy shortcuts and forget to ask if what they’re doing is really correct.

These algorithms are pervasive throughout the financial world, academia, and the justice system, and they typically exhibit three characteristics that maximize both their efficiency and their danger: “opacity, scale, and damage.” As the purposely obscured algorithms bring chaos to the world in ways that fly far below one’s conscious radar, they impact millions of people and threaten more lives than they purport to protect.

The real danger arrives when we forget that these models aren’t always truthful depictions of life itself, but distorted projections of our own imperfect selves. And more often than not, the image we see in the numerical mirror isn’t as pretty as we may want to admit.

2. Symptoms of poverty are not indications of crime; when algorithms reduce issues into explanations, they fail to uncover deep problems.

Many contemporary algorithms do little to combat the destructive history of racial prejudice. Black men disproportionately populate prisons and spend about 20% more time in confinement than white men found guilty of the same offenses. In order to address and correct this imbalance, courts applied technology to the task. Unfortunately, their attempts failed to go beyond simple explanations and fell victim to the same flawed thinking patterns their human creators perpetrated. Though the systems initiated throughout the judicial system begin with the noble aim of eliminating racism, they often accomplish the opposite—the present fails to leave history’s mistakes behind as deceptively skewed data is run through algorithms.

The “recidivism models” applied in 24 US states are just one example of this, attempting to remove bias from the process of determining prison sentences. Recidivism refers to a person’s likelihood of committing another crime, and it often factors into trial outcomes. In the past, racial prejudice skewed these cases, so courts sought new ways to deliver verdicts without the pull of human error. To arrive at a quantitative value for recidivism, scientists created algorithmic models, many of which use information sourced from the Level of Service Inventory-Revised (LSI-R) examination. Created in 1995, the LSI-R presents prisoners with a series of questions that statisticians determined would be significant in quantifying a prisoner’s “recidivism risk.” While this test doesn’t invoke race and actually aims to avoid prejudice, many of the questions it poses are deceptively flawed.

For instance, the examination features various questions about a person’s neighborhood or previous experience with the police. On the surface, these questions seem perfectly valid in predicting whether a criminal might become involved in more crime, but closer inspection reveals glaring oversights. Though the exam’s questions aren’t purposely pointed, they inevitably disadvantage people from impoverished areas with greater police presence. In 2013, young black and Hispanic men living in New York accounted for about 40% of police checks (nearly all of which ended without an arrest), despite making up less than 5% of the population. As a result, those from wealthier neighborhoods or families inadvertently gain an advantage in these cases. Though algorithms may seem to level the scales, the weight tips once again, conflating poverty and race.

If a prisoner answers affirmatively to these questions on the LSI-R, his “recidivism risk” rises accordingly. Meanwhile, only the statisticians know how these questions factor into the outcome, attaching varying degrees of importance to the data points beyond the test takers’ knowledge. This seemingly equalizing model perpetuates racial inequality by leading to a “feedback loop” that simply reaffirms its findings. Prisoners from impoverished areas receive greater scores of risk and rates of extended sentences, pushing them even further into lives of crime as they sit in prison and as they return to their communities.
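A rough sketch can show how a questionnaire with hidden weights turns circumstances into a score. Everything here is an assumption made for illustration: the question names, the weights, and the `risk_score` function are hypothetical and are not the actual LSI-R items or scoring method, which the summary notes only the statisticians know.

```python
# Hypothetical weights and questions; NOT the real LSI-R items or scoring.
WEIGHTS = {
    "prior_convictions": 2.0,
    "lives_in_high_crime_area": 1.5,
    "prior_police_contact": 1.0,
    "friends_or_family_with_records": 1.0,
}


def risk_score(answers):
    """Add up the weight of every question answered 'yes' (1 = yes, 0 = no)."""
    return sum(WEIGHTS[question] * answer for question, answer in answers.items())


# Two people with the same conviction history but different surroundings.
person_a = {
    "prior_convictions": 1,
    "lives_in_high_crime_area": 0,
    "prior_police_contact": 0,
    "friends_or_family_with_records": 0,
}
person_b = {
    "prior_convictions": 1,
    "lives_in_high_crime_area": 1,
    "prior_police_contact": 1,
    "friends_or_family_with_records": 1,
}

score_a = risk_score(person_a)
score_b = risk_score(person_b)
print(score_a, score_b)
```

In this toy version, the second person’s higher score comes entirely from answers about neighborhood and police contact, the kind of proxy the insight above describes as standing in for poverty.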

Persistent problems expand when they’re squeezed through harmful algorithms that unknowingly mistake symptoms of poverty for indications of crime. These seemingly evenhanded models strive for equality but simply reify a destructive racial divide.

3. Search engines can’t tell you what you need—they simply toss you down the rabbit hole.

The world of contemporary advertising is a tangle of algorithms—and who can complain? These models draw your eye to that long-sought pair of shoes, or a vacation in Hawaii you’ve just been dying to take. At first scroll, there doesn’t seem to be anything wrong with receiving a rush of personalized ads, but when advertisers aim these algorithms toward people who need financial help, the models turn menacing. Similar to the algorithms practiced throughout the judicial system, many advertising models pose disproportionate harm to people living in disadvantaged circumstances. The only difference between the two is that this time, many advertisers employ these deceptive algorithms knowingly, cheating trusting scrollers to make a greater profit.

The nature of these “predatory ads” is in the name: they prey on unknowing victims by going undercover. Oftentimes, these schemes target immigrants, veterans, people from particular zip codes, and students who might receive financial aid, presenting ads with purposely misleading faux military seals and deceitful enrollment information to draw them into hugely overpriced programs. What seems like a simple advertisement is actually a highly calculated campaign to draw a particular user’s click and eventually her cash, too.

“For-profit” colleges like the University of Phoenix, Vatterott College, and Corinthian College among many others are prime employers of such ad strategies, positioning their ads to reach struggling single mothers, veterans, and other people their algorithms deem profitable. With the detailed information provided by platforms like Google, these universities are able to locate people from impoverished areas or disadvantaged backgrounds by sifting through their data and finding their “pain point,” or their most lucrative insecurity. With that in mind, they market their deceptively calculated service to people who are unaware that they have fallen victim to a for-profit college’s false goodwill.

Before California’s attorney general brought various charges against Corinthian College, it was rolling in algorithmic success. With $120 million every year poured into its divisive scheme, the college gathered thousands of new students and $600 million in profits using harmful and covert means to appeal to potential customers. According to the author, their systems took aim at “isolated” people with “few people in their lives who care about them,” marketing their enormously expensive services ($68,800 for a degree that costs $10,000 elsewhere) to people who were likely hoping for direction and (more importantly) were eligible for federal aid. When the college went bankrupt, its students’ debt totaled $3.5 billion. To make matters worse, a study discovered that when it comes time to get a job, these kinds of expensive degrees from for-profit schools aren’t even as helpful as those from much less expensive community colleges.

Not only do algorithms of predatory ads exploit personal information about those they seek to attract, but they do so maliciously, perpetuating the dismal financial situations of their target audience, instead of improving them as promised. Thousands of students, 11% of the university population, are caught up in this system, and many of them are awash in debt, set back even further than where they started. It turns out, the degree wasn’t even worth the work.

4. University rankings crown schools with illusory prestige, but machines shouldn’t dictate educational value.

Traditional universities also fall victim to misguided algorithms. If you’ve ever withstood the existentially painful process of the modern college application, you’re probably familiar with the famed list of schools released by U.S. News. Their site promises a ranking of the best, brightest, and most prestigious universities on the academic market, all sorted and placed on a shining, digitized pedestal for your perusal. Though these online rankings appear reasonable, harmless, and maybe even helpful for all those overwhelmed high school seniors (and parents too), the formula lurking beneath them deepens the societal divide driven by a corrupted system of higher education.

Though the task of evaluating the overall merit of a particular university was an ambiguous one, U.S. News went ahead anyway, employing “proxies” to measure a school’s value. The magazine contrived its models based on factors like standardized test scores, acceptance rates, and the quantity of philanthropic alumni to form an algorithm that accounted for 75% of a school’s quantitative ranking. But the data they chose was far from impartial; rather, it employed factors from the most prestigious (and expensive) universities as its model.
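To make the idea of ranking by proxy concrete, here is a minimal sketch of a weighted composite score. The individual weights, the 0-to-1 scales, the `other_component` field, and the two example schools are all assumptions invented for illustration; only the three proxy categories and the 75% share come from the summary above, and this is not the actual U.S. News formula.

```python
# Hypothetical proxy weights (summing to 1); the real formula's weights are not given here.
PROXY_WEIGHTS = {"test_scores": 0.4, "selectivity": 0.4, "alumni_giving": 0.2}


def composite_score(school, formula_share=0.75):
    """Blend the proxy-based part (75%) with an unspecified remaining part (25%)."""
    proxy_part = sum(weight * school[name] for name, weight in PROXY_WEIGHTS.items())
    return formula_share * proxy_part + (1 - formula_share) * school["other_component"]


# Two invented schools, identical on every proxy but very different in price.
pricey = {"test_scores": 0.9, "selectivity": 0.8, "alumni_giving": 0.7,
          "other_component": 0.6, "tuition": 60_000}
affordable = {"test_scores": 0.9, "selectivity": 0.8, "alumni_giving": 0.7,
              "other_component": 0.6, "tuition": 15_000}

print(composite_score(pricey), composite_score(affordable))
```

Because tuition never enters the calculation, both schools receive the same score, which illustrates how a formula built on such proxies gives a school no reason to keep prices down.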

In 1988, the first of these new and improved U.S. News rankings appeared, quickly consuming the education industry and forming a harmful, cyclical precedent for the system thereafter: the idea that all colleges and universities should strive to be like the priciest ones. Despite attempts by the Obama administration decades later to recalibrate the algorithms for greater relevance to students from all financial backgrounds, the initial ranking system stands because institutional leaders have resisted change.

Most dangerously, the algorithm of the ranking system perpetuates the boom of college tuition prices, which expanded by 500% from 1985 to 2013. As colleges siphon money to the creation of new sports facilities and campus amenities to draw more students and climb in the rankings, they push tuition costs higher to fund these endeavors. As a result, highly ranked schools and the degree of social prestige that comes with attending one of these institutions make the difference between financial ability and intelligence difficult to see.

After all, if Harvard and Princeton are at the top of the list, then they must be the best educational options, admitting only the smartest of students. This isn’t always the case, though. What the rankings don’t show is that those Ivy League students often aren’t the most intelligent but the most financially advantaged, able to take part in expensive test and application tutoring programs, some costing their parents up to $16,000. Now, under the roving eye of the U.S. News ranking and the substantial quantity of students it brings to campuses, more universities are pressed to tamper with data points or raise tuition prices to keep up with the best-ranked schools. With their attention fixed on the U.S. News ranking criteria, some institutions put less emphasis on education quality or affordability, which are important concerns for families.

College has devolved into a consumer commodity, one that can be bought at ever-increasing prices. Much of the educational system is fueled by an algorithm that pushes students to earn debt like degrees—without always delivering the value that should come with sky-high prices.

5. Culture’s contemporary paper boy isn’t even a human—the news you see on your social media feed is hand delivered by an algorithm.

As you scroll through your morning Facebook feed, it’s all too easy to think the pictures flicking into view at the tap of your fingers are products of personal choice. But beyond the shining screen of your smartphone, reality is a bit less bright. The truth is, you have far less control over what you see on your social media feed than you might think, and more dangerously, you have far less control over how you respond to the particular things you see than you would ever care to imagine. With 1.5 billion active users spending about 39 minutes per day on their site—almost half consuming their news through the fragmented, fury-inspiring headlines on social media—the influence that Facebook’s underlying algorithms exert is enormous and oftentimes, frightening.

Waves of studies testify to Facebook’s power to impact things as significant as users’ voting patterns and their emotions. In a disturbing study from 2012, researchers tested the impact of Facebook news feeds on users’ feelings, dividing participants into two groups: one that received happier updates and another that was hit with more depressing information. What they found is terrifying and fascinating: participants’ feelings really did change based upon what they read in their feed. Simply witnessing, even digitally, the feelings of other people as reflected in emotionally charged posts caused those who read the posts to adopt the same moods and later parrot them in their own posts.

When social media moguls shake hands with political hopefuls, the “microtargeting” model appears more advantageous (and more dangerous) than ever. Contemporary microtargeting is a lucrative tool for politicians, but it often exploits voters’ information and undermines their ability to see reality clearly. By presenting scrollers with onslaughts of personalized candidate information, appealing to the aspects of the politician’s platform that would be most attractive to the browser, these algorithms twist perceptions. More dangerously, they do this to people who are completely oblivious that it’s even happening. A study conducted by Karrie Karahalios in 2013 discovered that 62% of those asked didn’t know that Facebook doled out individualized information to them. What you see isn’t up to you, and your actions thereafter might not be entirely self-directed, either.

This may sound a bit dystopian, but today’s Facebook profile is a brilliant breeding ground for politics to run amok. According to an investigative report released by the Guardian in 2015, the now-notorious organization Cambridge Analytica collected voters’ Facebook profiles to create personality assessments with the intent of personalizing television advertisements for Ted Cruz’s political canvassing.

This kind of political microtargeting purposely impacts people’s actions, oftentimes influencing citizens with mentally dictatorial digital processes. The days of unwieldy algorithms are already here, and they’re impacting processes in ways we may not even realize.

6. To solve the equation of corrupted algorithms, we need widespread knowledge and intentional standards.

The potential of the algorithm is vast: its influence bleeds into increasingly significant fields of society, potentially altering billions of lives with a few lines of code. Algorithmic models underlie the justice system, education, advertising, social media, credit scores, work schedules, and even auto insurance. If people fail to recognize the potency of these algorithms and their capacity to perpetuate inequalities and erode individuals’ abilities to think and choose freely, society may find itself an engineered product of its own wily creation.

To stem the reach of these destructive models, the creators must recognize the immense power they hold and where that power can go haywire. Moreover, the overarching systems that employ these algorithms must also be subject to particular standards. “Auditing” these models is a potentially helpful way to maintain their sought-after neutrality. Institutions like Princeton, Carnegie Mellon, and MIT are at the fore of these developments, sending out fleets of automated internet browsers to test the models people participate in and snoop for harmful flaws. Once people begin to locate and remedy these underlying issues, algorithms might become channeled toward more humane goals.

Culture is digitizing rapidly, and the presence of algorithms is one highly influential aspect of this transformation. Though we should remain informed of the oftentimes deceptive tug of the algorithm, we also should request more information about the data organizations gather to fuel these models. While culture must acknowledge algorithms for the fallible lines of mathematics they are and grow conscientious of their potential flaws, the statisticians who create them must acknowledge every individual’s entitlement to know the purpose and destination of their own data. Only then will organizations begin building algorithms for good and calculate humanity into their equations.

This newsletter is powered by Thinkr, a smart reading app for the busy-but-curious. For full access to hundreds of titles — including audio — go premium and download the app today.

Copyright © 2026 Veritas Publishing, LLC. All rights reserved.

311 W Indiantown Rd, Suite 200, Jupiter, FL 33458
